A Comprehensive LC-MS Metabolomics Protocol: From Foundational Principles to Advanced Applications and Troubleshooting

Abstract

This article provides a comprehensive guide to liquid chromatography-mass spectrometry (LC-MS) metabolomics, catering to researchers and drug development professionals. It covers the foundational principles of global and targeted metabolomics, detailing the complete workflow from experimental design and sample preparation to data acquisition. The protocol delves into advanced methodologies for data processing and metabolite identification, offers practical solutions for troubleshooting large-scale studies and optimizing parameters, and concludes with rigorous procedures for method validation, quantitative analysis, and integration of multi-platform data. The objective is to equip scientists with a robust, end-to-end framework for conducting rigorous and reproducible metabolomics studies.

Understanding LC-MS Metabolomics: Core Concepts and Workflow Design

Metabolomics, the comprehensive study of small-molecule metabolites, serves as a critical bridge between genotype and phenotype by providing a direct snapshot of physiological activity within a biological system [1]. In liquid chromatography-mass spectrometry (LC-MS) based metabolomics, two fundamental analytical strategies have emerged: targeted and untargeted metabolomics. These approaches represent complementary philosophies in experimental design, data acquisition, and biological interpretation [2] [3].

Targeted metabolomics focuses on the precise measurement of a predefined set of chemically characterized metabolites, while untargeted metabolomics aims to comprehensively capture as many metabolites as possible, including unknown compounds [2] [3]. The selection between these methodologies is not merely technical but fundamentally shapes the biological questions that can be addressed, influencing everything from sample preparation to data interpretation [4]. This article delineates the core objectives, applications, and procedural frameworks for both approaches within the context of LC-MS based research.

Core Conceptual Differences and Strategic Objectives

The strategic implementation of targeted versus untargeted metabolomics is guided by their distinct philosophical and operational differences, summarized in Table 1.

Table 1: Fundamental Comparison of Targeted and Untargeted Metabolomics

| Feature | Targeted Metabolomics | Untargeted Metabolomics |
|---|---|---|
| Primary Objective | Hypothesis testing and validation [3] [5] | Hypothesis generation and discovery [3] [5] |
| Analytical Scope | Narrow and focused; dozens to ~100 predefined metabolites [4] [5] | Broad and comprehensive; hundreds to thousands of metabolites, including unknowns [2] [4] |
| Quantification | Absolute quantification using calibration curves and isotope-labeled internal standards [5] [1] | Relative quantification (semi-quantitative); expresses changes as fold-differences [2] [5] |
| Data Complexity | Lower; straightforward analysis of known metabolites [5] | High; requires sophisticated bioinformatics for multivariate statistics and metabolite identification [2] [6] |
| Ideal Application | Validating known biomarkers, tracking specific pathway fluxes, clinical diagnostics [3] [5] | Discovering novel biomarkers, uncovering unexpected metabolic perturbations, global metabolic profiling [2] [3] |

Targeted metabolomics is a hypothesis-driven approach analogous to using a powerful flashlight to examine specific, known details in a room [4]. It leverages prior knowledge of metabolic pathways to precisely quantify a defined set of analytes, often for validation purposes [3] [1]. Its strength lies in its high sensitivity, specificity, and precision enabled by optimization for specific metabolites and the use of authentic isotope-labeled internal standards for absolute quantification [5] [7].

In contrast, untargeted metabolomics is a discovery-oriented approach, equivalent to turning on all the lights in a room to see everything at once, both expected and unexpected [4]. It conducts a global, unbiased analysis without predefining metabolic targets, making it ideal for hypothesis generation and biomarker discovery [2] [3]. This method excels in its ability to measure thousands of metabolites in a single analysis and to detect novel compounds, though it typically provides only relative quantification and suffers from a bias toward detecting higher-abundance metabolites [2] [3].

Experimental Workflows and LC-MS Protocols

The methodological divergence between targeted and untargeted metabolomics necessitates distinct experimental workflows, from sample preparation to data acquisition.

Untargeted Metabolomics Workflow and Protocol

Untargeted metabolomics prioritizes broad metabolite coverage, requiring protocols that preserve chemical diversity.

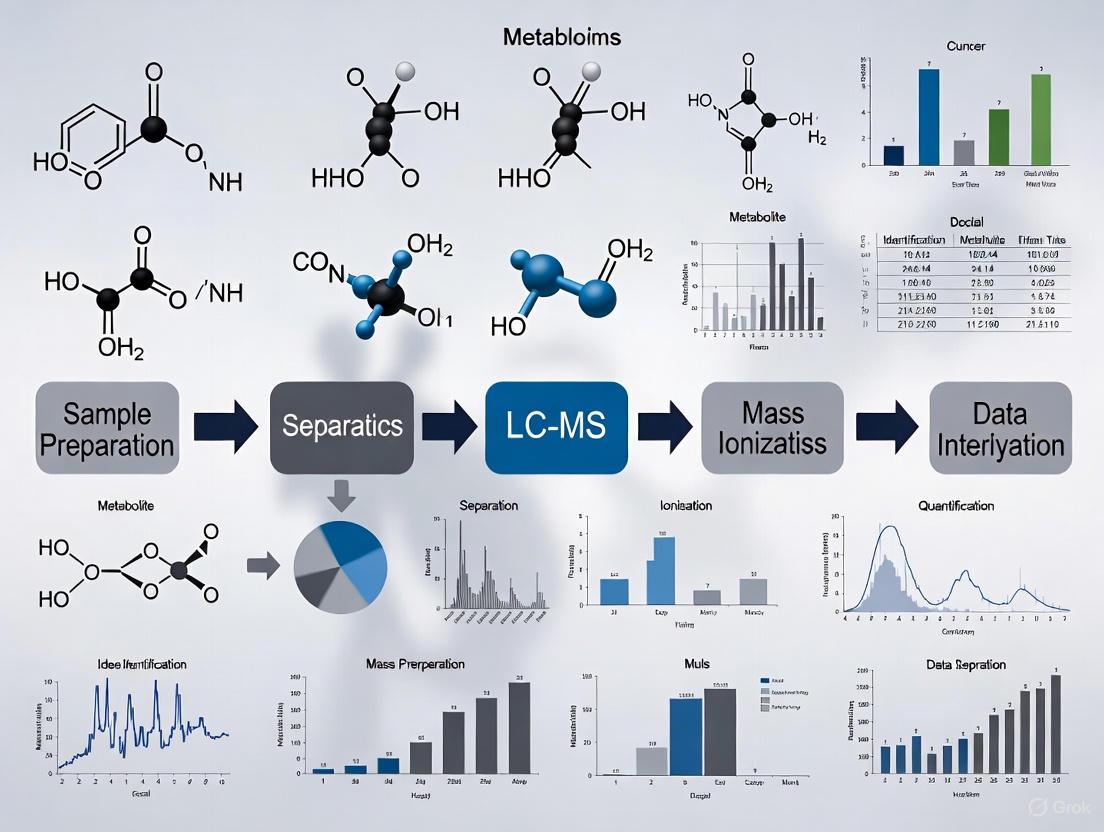

Figure 1: Generalized workflow for untargeted metabolomics, highlighting comprehensive extraction and discovery-driven data processing.

A typical protocol for untargeted analysis of biofluids (e.g., plasma, urine) involves a global metabolite extraction designed to capture a wide physicochemical range [6]. A recommended extraction solvent is acetonitrile:methanol:formic acid (e.g., 74.9:24.9:0.2, v/v/v) [6]. Samples are vortexed vigorously and centrifuged to pellet precipitated proteins. The supernatant is then analyzed by LC-MS.

For LC-MS analysis, hydrophilic interaction liquid chromatography (HILIC) is often employed for polar metabolites, using a column like a Waters Atlantis HILIC Silica column [6]. Mobile phases typically consist of (A) 10 mM ammonium formate with 0.1% formic acid in water and (B) 0.1% formic acid in acetonitrile [6]. Separation is achieved with a gradient elution. Data acquisition is performed using a high-resolution accurate mass instrument (e.g., Orbitrap or Q-TOF) [6] [8]. A common acquisition mode is Data-Dependent Acquisition (DDA), which selects intense precursor ions for fragmentation to generate MS/MS spectra for annotation [8].

Data processing is a critical step, involving peak picking, retention time alignment, and peak grouping using software like XCMS, MZmine, or commercial platforms [8]. Subsequent statistical analysis (e.g., PCA, PLS-DA) identifies significant features, which are then annotated against metabolic databases [6].

Targeted Metabolomics Workflow and Protocol

Targeted metabolomics employs optimized protocols for specific metabolites, emphasizing precision and accuracy.

Figure 2: Generalized workflow for targeted metabolomics, emphasizing precise quantification using internal standards and MRM.

A protocol tailored for rare cell populations (e.g., 5,000 hematopoietic stem cells) demonstrates key targeted principles [9]. Cells are sorted directly into ice-cold extraction solvent (e.g., acetonitrile). A key step is the addition of authentic isotope-labeled internal standards (AILIS) for each target metabolite before extraction, which corrects for analyte loss and ion suppression, enabling absolute quantification [5] [1].

LC-MS analysis is typically performed using a triple quadrupole (QQQ) mass spectrometer. The critical data acquisition mode is Multiple Reaction Monitoring (MRM), where the first quadrupole (Q1) selects a specific precursor ion for the metabolite, the second (Q2) fragments it, and the third (Q3) selects a unique product ion [1] [7]. This precursor-product ion pair is specific to each metabolite, resulting in high sensitivity and specificity. Chromatographic separation can use HILIC for polar metabolites or reversed-phase chromatography for lipids [1].

Data processing involves integrating chromatographic peaks for each MRM transition. Quantification is achieved by comparing the peak area of the native metabolite to that of its corresponding AILIS and interpolating from a calibration curve [7]. This yields absolute concentrations (e.g., nmol/L), allowing for direct biological interpretation and cross-study comparisons [5].
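
The quantification step described above can be sketched in a few lines. This is a minimal illustration, assuming a linear calibration of the analyte-to-internal-standard peak area ratio against concentration; the function names (`fit_line`, `quantify`) and all numeric values are hypothetical and not taken from any cited protocol.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def quantify(analyte_area, istd_area, slope, intercept):
    """Interpolate a concentration from the analyte/internal-standard area ratio."""
    ratio = analyte_area / istd_area
    return (ratio - intercept) / slope


# Hypothetical calibration standards: concentration (nmol/L) vs. area ratio.
cal_conc = [10.0, 50.0, 100.0, 500.0, 1000.0]
cal_ratio = [0.02, 0.10, 0.20, 1.00, 2.00]  # made-up, perfectly linear values

slope, intercept = fit_line(cal_conc, cal_ratio)

# Hypothetical peak areas for one MRM transition and its labeled standard.
sample_conc = quantify(analyte_area=45000.0, istd_area=100000.0,
                       slope=slope, intercept=intercept)
print(f"Estimated concentration: {sample_conc:.1f} nmol/L")
```

Real calibration data are rarely this clean; weighted regression and an explicit check that the sample falls inside the calibrated range are common refinements that this sketch omits.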

The Scientist's Toolkit: Essential Research Reagents and Materials

The execution of robust metabolomics studies requires carefully selected reagents and materials. Table 2 outlines key solutions used in the featured protocols.

Table 2: Key Research Reagent Solutions for LC-MS Metabolomics

| Reagent/Material | Function | Application Notes |
|---|---|---|
| Extraction Solvent (ACN:MeOH:FA) [6] | Global metabolite extraction; denatures proteins and solubilizes a wide range of metabolites. | Typical ratio: 74.9:24.9:0.2 (v/v/v). Acetonitrile and methanol should be LC/MS-grade to minimize background noise. |
| Isotope-Labeled Internal Standards (AILIS) [5] [1] | Enables absolute quantification; corrects for matrix effects and analyte loss during sample preparation. | "Authentic" standards (identical chemical structure with stable isotopes) are crucial for high precision and to avoid spurious correlations [5]. |
| HILIC Chromatography Column [6] [8] | Separates polar and hydrophilic metabolites that are poorly retained by reversed-phase columns. | Examples: Waters Atlantis Silica, BEH Amide, ZIC-pHILIC. More sensitive to matrix effects and requires longer equilibration than RP columns [8]. |
| LC Mobile Phase Additives [6] [1] | Enables chromatographic separation and efficient ionization in the mass spectrometer. | 10 mM Ammonium formate and 0.1% formic acid are common. Volatile buffers are essential for LC-MS compatibility. |
| Quality Control (QC) Sample [8] | Monitors instrument performance and corrects for analytical drift during a batch run. | Typically a pooled sample from all study samples or a commercial reference material. Injected repeatedly throughout the analytical sequence. |

Integrated and Advanced Approaches

Recognizing the limitations of both targeted and untargeted methods, researchers increasingly adopt hybrid strategies. Semi-targeted metabolomics represents a middle ground, focusing on a larger, predefined list of targets (e.g., hundreds of metabolites) without a specific hypothesis for each one, thus allowing for both focused analysis and serendipitous discovery [2] [4].

Another powerful strategy is the sequential use of untargeted and targeted methods. Untargeted metabolomics is first used for broad biomarker screening and hypothesis generation. Subsequently, targeted metabolomics is employed to validate the discovered biomarkers with high precision in a larger cohort [2] [3]. This combined approach leverages the strengths of both worlds, facilitating a more complete biological narrative.

Furthermore, integrating metabolomics with other omics data, for example in metabolite genome-wide association studies (mGWAS), is revealing genetic associations with metabolite levels and providing deeper insight into the causal mechanisms underlying physiology and disease [2]. For data interpretation, enrichment analysis tools such as Mummichog, Metabolite Set Enrichment Analysis (MSEA), and Over Representation Analysis (ORA) are used to identify perturbed biological pathways from untargeted datasets; recent studies indicate that Mummichog may perform well for in vitro data [10].

Targeted and untargeted metabolomics are not competing but complementary methodologies within the LC-MS researcher's arsenal. The choice between them is fundamentally dictated by the research question: untargeted for discovery when the biological landscape is unknown, and targeted for validation and precise quantification when specific metabolic entities are of interest. As the field evolves, the integration of these approaches, along with advances in instrumentation and bioinformatics, continues to enhance our ability to decipher the complex language of metabolism, thereby accelerating drug development and deepening our understanding of health and disease.

Liquid Chromatography-Mass Spectrometry (LC-MS) based metabolomics has emerged as a powerful analytical technique for comprehensively profiling small molecules in biological systems. As the final downstream product of the central dogma of molecular biology, metabolites offer a direct reflection of cellular phenotype and physiological status, influenced by genetics, environment, diet, and disease [11]. This application note provides a detailed protocol for the complete LC-MS metabolomics workflow, framed within the context of methodological standardization for drug development and biomedical research. We outline a structured pathway from initial study design to final biological interpretation, emphasizing robust experimental practices and data integrity to ensure reproducible and meaningful results.

The entire LC-MS metabolomics process, from sample collection to data sharing, can be visualized as a cohesive workflow where each stage builds upon the previous one. The following diagram illustrates the logical sequence and interconnections between these critical phases:

Workflow Stages and Methodologies

Stage 1: Study Design

Objective: A well-structured study design is foundational for generating reliable, statistically robust, and interpretable metabolomics data [12].

Protocol:

- Define Research Objective: Clearly articulate the biological question, whether investigating disease mechanisms, drug responses, or metabolic pathway alterations.

- Select Sample Groups: Determine appropriate control and experimental groups with sufficient sample size to achieve statistical power. Common designs include case-control, longitudinal, and cohort studies.

- Choose Biological Matrix: Select appropriate sample types (e.g., plasma, urine, tissue, cell culture) based on the research objective.

- Plan Analytical Approach: Decide between untargeted metabolomics (comprehensive profiling of all measurable metabolites) and targeted metabolomics (quantification of a predefined set of metabolites) [13].

- Incorporate Quality Control (QC): Plan for QC samples, including pooled quality control samples (pooled from all samples), process blanks, and authentic reference standards to monitor instrument performance and data quality throughout the acquisition sequence [12].

Stage 2: Sample Preparation

Objective: To efficiently extract metabolites while preserving their integrity and quantitatively representing the in vivo metabolic state [11] [12].

Protocol:

- Collection & Quenching: Collect samples under standardized conditions to minimize pre-analytical variability. Immediately quench metabolic activity using rapid cooling with liquid nitrogen or chilled organic solvents (e.g., methanol at -80°C) to halt enzyme activity and stabilize the metabolome [11] [12].

- Metabolite Extraction: Employ solvent-based extraction (protein precipitation with organic solvent, or liquid-liquid extraction for biphasic systems) to remove proteins and isolate metabolites. The choice of solvent dictates metabolite coverage:

- Biphasic solvents (e.g., Methanol/Chloroform/Water): Simultaneously extract polar (into the methanol/water phase) and non-polar metabolites (into the chloroform phase) [11].

- Polar solvents (e.g., Methanol, Acetonitrile): Preferable for extracting hydrophilic metabolites like amino acids and sugars [11].

- Non-polar solvents (e.g., MTBE, Chloroform): Optimal for comprehensive lipidomics analysis [11].

- Add Internal Standards: Spike isotope-labeled internal standards into the extraction solvent prior to sample processing. These standards correct for variability in extraction efficiency, matrix effects, and instrument performance, enabling accurate quantification [11].

- Purification & Derivatization: Remove particulate matter via filtration or centrifugation. Derivatization is less common in LC-MS than in GC-MS but may be used to enhance detection for specific compound classes.

Table 1: Common Metabolite Extraction Solvents and Applications

| Solvent Type | Examples | Target Metabolites | Characteristics |
|---|---|---|---|
| Polar | Methanol, Acetonitrile, Water | Amino acids, sugars, nucleotides, organic acids | High polarity, miscible with water, effective for polar metabolites [11] |
| Non-polar | Chloroform, MTBE, Hexane | Lipids, fatty acids, sterols, hormones | Hydrophobic, effective for lipophilic compounds [11] |
| Biphasic/Mixed | Methanol/Chloroform/Water, Methanol/Isopropanol/Water | Broad-range, polar and non-polar | Combination of polar and non-polar properties for comprehensive extraction [11] |

Stage 3: Data Acquisition

Objective: To separate, detect, and measure the mass-to-charge ratio (m/z) and intensity of metabolites in the sample extracts [12].

Protocol:

- Chromatographic Separation: Utilize Liquid Chromatography (e.g., Reversed-Phase, HILIC) to separate metabolites based on chemical properties like polarity, reducing ion suppression and complexity for the mass spectrometer.

- Mass Spectrometry Detection: Analyze metabolites using high-resolution mass spectrometers (e.g., Q-TOF, Orbitrap) for untargeted discovery, or triple quadrupole (QqQ) instruments for targeted, high-sensitivity quantification in MRM mode [13].

- Quality Control During Acquisition: Intersperse QC samples throughout the analytical batch:

- Pooled QC Samples: Analyze a sample pooled from all aliquots every 4-10 injections to monitor instrument stability, signal drift, and reproducibility.

- Blanks: Run solvent blanks to identify background contamination and carryover.

- Reference Standards: Use authentic chemical standards for quality control and retention time calibration.
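
The QC scheme above can be turned into a concrete injection list. The sketch below is one possible layout, assuming the study samples have already been placed in randomized order; `build_sequence` and the sample labels are hypothetical, and the choice to bracket the batch with a solvent blank and a pooled QC is an assumption rather than a requirement stated in the protocol.

```python
def build_sequence(samples, qc_every=5):
    """Return an injection list with pooled QCs interleaved among samples.

    samples  : list of sample labels, assumed to be in randomized order
    qc_every : number of study samples between consecutive pooled QCs
    """
    sequence = ["Blank", "QC"]  # open with a solvent blank and a pooled QC
    for i, sample in enumerate(samples, start=1):
        sequence.append(sample)
        if i % qc_every == 0:
            sequence.append("QC")
    if sequence[-1] != "QC":
        sequence.append("QC")  # always close the sample block with a QC
    sequence.append("Blank")  # final blank to check for carryover
    return sequence


# Twelve hypothetical study samples, QC after every fifth sample.
study_samples = [f"S{i:02d}" for i in range(1, 13)]
sequence = build_sequence(study_samples, qc_every=5)
for position, label in enumerate(sequence, start=1):
    print(position, label)
```

Setting `qc_every` anywhere in the 4-10 range mentioned above changes only the spacing; shorter intervals cost instrument time but give a denser picture of drift.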

Stage 4: Data Processing

Objective: To convert raw instrumental data into a structured data matrix of features (m/z and retention time pairs) with aligned intensities across all samples [12].

Protocol:

- Peak Picking & Deconvolution: Use software (e.g., XCMS, Progenesis QI, MassHunter) to detect chromatographic peaks and resolve co-eluting ions [14] [13].

- Retention Time Alignment & Peak Matching: Correct for minor shifts in retention time across samples and align features to ensure each metabolite is represented by the same data point in all samples.

- Normalization & Batch Correction: Apply statistical methods to correct for systematic variation from technical artifacts (e.g., instrument drift, batch effects) and biological confounding factors (e.g., urine dilution) [12].

- Imputation: Handle missing values using strategies such as replacement by a minimum value or k-nearest neighbors (KNN) imputation.
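
As a small illustration of the imputation step, the sketch below replaces each missing value with the minimum observed intensity of the same feature. It assumes a samples-by-features matrix stored as nested lists with `None` marking a missing value; `impute_min` and the example intensities are hypothetical. KNN imputation would need a dedicated library and is not shown.

```python
def impute_min(matrix):
    """Replace None in each feature column with that column's minimum observed value.

    matrix: list of rows (samples), each a list of feature intensities or None.
    Returns a new matrix; the input is left unmodified.
    """
    n_features = len(matrix[0])
    imputed = [row[:] for row in matrix]
    for j in range(n_features):
        observed = [row[j] for row in matrix if row[j] is not None]
        if not observed:
            continue  # feature never detected; leave it for downstream filtering
        fill = min(observed)
        for row in imputed:
            if row[j] is None:
                row[j] = fill
    return imputed


# Hypothetical 3-sample x 3-feature intensity matrix.
raw = [
    [100.0, None, 5.0],
    [120.0, 30.0, None],
    [None, 40.0, 7.0],
]
filled = impute_min(raw)
print(filled)
```

Minimum-value replacement implicitly treats missing values as signals below the detection limit; that assumption is worth checking before applying it to values that are missing for other reasons.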

Stage 5: Metabolite Identification

Objective: To annotate and identify statistically significant features from the processed data matrix, linking them to known chemical structures [12].

Protocol:

- Spectral Matching: Compare experimental MS/MS spectra against reference spectral libraries (e.g., MassBank, HMDB, GNPS, METLIN). A high spectral match provides a confident annotation [12] [13].

- Database Search by Accurate Mass: Query compound databases using the accurately measured m/z value (typically with mass accuracy < 5-10 ppm) to generate a list of candidate metabolites [13].

- Confidence Levels: Report identification confidence based on the Metabolomics Standards Initiative (MSI) guidelines:

- Level 1: Identified - Matched by two or more orthogonal properties (e.g., accurate mass and MS/MS spectrum) to an authentic standard analyzed in the same laboratory.

- Level 2: Putatively Annotated - Based on spectral similarity to a public library.

- Level 3: Putatively Characterized Compound Class - Based on physicochemical properties or spectral similarity to a characteristic compound class.

- Level 4: Unknown - Distinct but unidentifiable metabolite [12].
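
The accurate-mass search in this stage reduces to a parts-per-million comparison between measured and theoretical m/z. The following sketch assumes protonated ions ([M+H]+) and a tiny hand-made candidate table; `ppm_error`, `candidates_within`, and the measured m/z are illustrative, and a real search would query a database such as HMDB across several adduct types.

```python
PROTON_MASS = 1.007276  # Da; added to a neutral monoisotopic mass for [M+H]+


def ppm_error(measured_mz, theoretical_mz):
    """Signed mass error in parts per million."""
    return (measured_mz - theoretical_mz) / theoretical_mz * 1e6


def candidates_within(measured_mz, neutral_masses, tol_ppm=5.0):
    """Return (name, ppm error) for candidates whose [M+H]+ lies within tol_ppm."""
    hits = []
    for name, neutral_mass in neutral_masses.items():
        theoretical = neutral_mass + PROTON_MASS
        error = ppm_error(measured_mz, theoretical)
        if abs(error) <= tol_ppm:
            hits.append((name, round(error, 2)))
    return hits


# Illustrative candidate table of neutral monoisotopic masses (Da).
candidate_masses = {
    "glucose": 180.06339,
    "fructose": 180.06339,  # isomer of glucose, identical mass
    "leucine": 131.09463,
}

hits = candidates_within(181.0710, candidate_masses, tol_ppm=5.0)
print(hits)
```

Both hexose isomers pass the mass filter, which is why accurate mass alone yields a candidate list rather than an identification; retention time and MS/MS comparison against an authentic standard are needed to reach Level 1.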

Stage 6: Biomarker Identification and Statistical Analysis

Objective: To uncover metabolites that are statistically significantly altered between experimental conditions and have potential diagnostic, prognostic, or therapeutic value.

Protocol:

- Univariate Statistics: Apply t-tests (for two groups) or ANOVA (for multiple groups) to find metabolites with significant abundance changes. Correct for multiple testing using False Discovery Rate (FDR) control [14] [12].

- Multivariate Statistics:

- Unsupervised (e.g., PCA): Used for exploratory data analysis to identify natural clustering, trends, and outliers without prior class labeling [13].

- Supervised (e.g., PLS-DA, OPLS-DA): Used to maximize separation between pre-defined classes and identify features most responsible for the discrimination [14].

- Data Visualization: Utilize specific plots to interpret statistical results:

- Volcano Plot: Visualizes the relationship between statistical significance (-log₁₀(p-value)) and the magnitude of change (log₂(Fold Change)), highlighting metabolites that are both statistically significant and biologically relevant [14] [13].

- Heatmaps: Display the relative abundance of significant metabolites across all samples, often combined with hierarchical clustering to group metabolites with similar profiles [13].

- Machine Learning: Employ algorithms like Random Forest or Support Vector Machines (SVM) for robust biomarker discovery and classification model building [12].
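
Two of the calculations in this stage are easy to make concrete: FDR control and the coordinates of a volcano plot. The sketch below implements the Benjamini-Hochberg adjustment, which is one common FDR procedure (the protocol does not specify which one to use), and computes log₂ fold change and -log₁₀(p) for a single feature. All p-values and group means are made up for illustration.

```python
import math


def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value to the smallest, enforcing monotonicity.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


def volcano_point(mean_case, mean_control, p_value):
    """Return (log2 fold change, -log10 p) for one feature."""
    return math.log2(mean_case / mean_control), -math.log10(p_value)


# Hypothetical raw p-values for four features.
raw_p = [0.01, 0.04, 0.03, 0.20]
q_values = benjamini_hochberg(raw_p)
print(q_values)

# Hypothetical feature: doubled mean intensity in cases, raw p = 0.01.
x, y = volcano_point(mean_case=200.0, mean_control=100.0, p_value=0.01)
print(x, y)
```

In practice the adjusted values, not the raw p-values, are compared against the chosen FDR threshold when selecting features to carry forward.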

Stage 7: Pathway Interpretation and Integration

Objective: To place the list of significant metabolites and identified biomarkers into a biological context by mapping them onto metabolic pathways.

Protocol:

- Pathway Analysis: Use enrichment analysis (e.g., with MetaboAnalyst) to identify biochemical pathways (from databases like KEGG, Reactome) that are significantly overrepresented in the dataset [12].

- Integration with Other Omics: Correlate metabolomics data with genomics, transcriptomics, and proteomics datasets to obtain a systems-level understanding of biological processes [12].
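
Over-representation analysis, one of the enrichment approaches used for pathway analysis, is usually based on a hypergeometric (one-sided Fisher) test. The sketch below shows that calculation for a single pathway; `ora_p_value` and the counts are hypothetical, and tools such as MetaboAnalyst may apply additional steps (for example topology weighting) that are not represented here.

```python
from math import comb


def ora_p_value(n_background, n_pathway, n_significant, n_overlap):
    """Hypergeometric probability of observing at least n_overlap pathway members.

    n_background  : metabolites measured and mappable to any pathway
    n_pathway     : background metabolites that belong to the pathway
    n_significant : metabolites in the significant list
    n_overlap     : significant metabolites that belong to the pathway
    """
    total = comb(n_background, n_significant)
    upper = min(n_pathway, n_significant)
    tail = sum(
        comb(n_pathway, k) * comb(n_background - n_pathway, n_significant - k)
        for k in range(n_overlap, upper + 1)
    )
    return tail / total


# Hypothetical counts: 500 background metabolites, 20 in the pathway,
# 40 significant overall, 6 of them in the pathway.
p = ora_p_value(n_background=500, n_pathway=20, n_significant=40, n_overlap=6)
print(f"ORA p-value: {p:.4g}")
```

The background should be the set of metabolites the platform could actually detect, not the whole database; using too large a background tends to overstate enrichment. P-values from many pathways should also be corrected for multiple testing.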

Stage 8: Data Sharing and Reproducibility

Objective: To ensure the transparency, reproducibility, and reusability of metabolomics data by the scientific community.

Protocol:

- Adhere to the FAIR Principles (Findable, Accessible, Interoperable, Reusable) [12].

- Submit raw and processed data, along with comprehensive metadata (experimental design, sample preparation, analytical methods), to public repositories such as MetaboLights or the Metabolomics Workbench [12].

- Follow community reporting standards for metabolite identification confidence [12].

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 2: Key Reagents and Materials for LC-MS Metabolomics

| Item | Function/Application | Examples & Notes |
|---|---|---|
| Internal Standards (Isotope-Labeled) | Correct for technical variability; enable absolute quantification. | ¹³C, ¹⁵N-labeled amino acids, lipids; added prior to extraction [11]. |
| Solvents for Extraction | Protein precipitation and metabolite extraction. | LC-MS grade Methanol, Acetonitrile, Chloroform, Water; form biphasic systems [11]. |
| Authentic Chemical Standards | Metabolite identification (Level 1 confidence) and quantification. | Purchase pure compounds for definitive confirmation of retention time and fragmentation [12]. |
| Quality Control Materials | Monitor instrument performance and data quality. | Pooled QC samples, process blanks, and commercial standard mixes [12]. |
| Chromatography Columns | Separate metabolites prior to MS detection. | Reversed-Phase (C18), HILIC; choice depends on metabolite polarity of interest. |

This application note provides a detailed, step-by-step protocol for the complete LC-MS metabolomics workflow. By adhering to this structured framework—emphasizing rigorous study design, robust sample preparation, comprehensive data processing, and confident metabolite identification—researchers can generate high-quality, reproducible data. The integration of statistical analysis and pathway interpretation ultimately transforms raw spectral data into profound biological insights, accelerating discovery in drug development and biomedical research.

In liquid chromatography-mass spectrometry (LC-MS) metabolomics research, the reliability and validity of findings hinge on a foundation of robust experimental design. The inherent complexity of biological samples, technical variability in analytical platforms, and the multifactorial nature of metabolic responses demand rigorous methodological planning. This document outlines application notes and detailed protocols for three critical steps: determining sample size, implementing proper replication, and executing randomization procedures. Adherence to these principles is mandatory for generating statistically sound, reproducible, and biologically meaningful data in drug development and basic research.

Determining Sample Size for Sufficient Statistical Power

Key Concepts and Definitions

Selecting an appropriate sample size is a critical step that ensures your study has a high probability of detecting scientifically meaningful effects, a property known as statistical power [15]. An underpowered study (with too small a sample size) risks missing true biological effects (Type II errors), while an overly large sample wastes resources and may expose subjects to unnecessary risks [15]. The goal is to find a balance that allows for the detection of meaningful differences with high confidence.

The following parameters are essential for any sample size calculation [16] [15] [17]:

- Statistical Power (1-β): The probability that the test will correctly reject a false null hypothesis (i.e., find a difference when one truly exists). Typically set at 80% or 90%.

- Significance Level (α): The probability of rejecting a true null hypothesis (Type I error, or false positive). Usually set at 0.05 (5%).

- Effect Size: The minimum magnitude of difference or relationship that you want to be able to detect, and that is considered biologically or clinically relevant.

- Population Variability (Standard Deviation): An estimate of the expected variance in your data. This can be obtained from pilot data, previous literature, or estimated.

Application Protocol: Sample Size Calculation for LC-MS Metabolomics

This protocol provides a step-by-step guide for performing an a priori sample size calculation, suitable for a grant application or study plan.

- Step 1: Define the Primary Hypothesis and Analysis Method. Clearly state the primary comparison (e.g., control vs. treated group). The statistical test you plan to use (e.g., t-test, ANOVA, correlation) will determine the exact formula.

- Step 2: Estimate Variability. Use the standard deviation (SD) of your metabolomic feature of interest from a pilot study or prior similar work. If no data are available, use a conservative (larger) estimate. Higher variability requires a larger sample size.

- Step 3: Define the Meaningful Effect Size. Determine the minimum fold-change or difference in metabolite abundance that is biologically significant in your context. Detecting a smaller effect requires a larger sample.

- Step 4: Set Power and Significance Levels. Conventionally, use a power of 80% or 90% and an alpha of 0.05.

- Step 5: Calculate Sample Size. Use statistical software (e.g., G*Power, R, PASS) or the formula below for a two-group comparison using a t-test:

n = [ (Z_{α/2} + Z_{β})^2 * (σ_1^2 + σ_2^2) ] / Δ^2

Where:

- n = sample size per group
- Z_{α/2} = Z-value for the desired alpha (1.96 for α = 0.05)
- Z_{β} = Z-value for the desired power (0.84 for 80% power)
- σ_1, σ_2 = estimated standard deviations of the two groups
- Δ = the desired effect size to detect

- Step 6: Account for Attrition. Increase the calculated sample size by 10-20% to compensate for potential sample loss, analytical dropouts, or poor data quality.
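
The two-group formula in Step 5 and the attrition adjustment in Step 6 can be evaluated with the Python standard library alone. This is a sketch of the normal-approximation formula only; `n_per_group` is a hypothetical helper, and dedicated tools such as G*Power use the t-distribution, so their results can be slightly larger, especially for small groups.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(delta, sd1, sd2, alpha=0.05, power=0.80, attrition=0.0):
    """Per-group sample size from the normal-approximation formula.

    delta     : smallest difference in means worth detecting
    sd1, sd2  : expected standard deviations of the two groups
    attrition : expected fractional loss (e.g. 0.15 for 15%)
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided alpha
    z_beta = NormalDist().inv_cdf(power)
    n = (z_alpha + z_beta) ** 2 * (sd1 ** 2 + sd2 ** 2) / delta ** 2
    # Round up to whole subjects, then inflate for expected losses.
    return ceil(ceil(n) * (1 + attrition))


for delta in (0.5, 1.0, 1.5, 2.0):
    base = n_per_group(delta, sd1=1.0, sd2=1.0)
    padded = n_per_group(delta, sd1=1.0, sd2=1.0, attrition=0.15)
    print(f"delta={delta}: n={base} per group, {padded} with 15% attrition")
```

Because an untargeted study tests many features at once, a stricter per-feature alpha may be appropriate; the `alpha` argument can be lowered to explore how that affects the required sample size.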

Table 1: Example Sample Size Requirements for a Two-Group Comparison (t-test)

Assumptions: Power = 80%, α = 0.05, equal group sizes, SD = 1.0

| Effect Size (Δ) | Sample Size per Group | Total Sample Size |
|---|---|---|
| 0.5 | 64 | 128 |
| 1.0 | 16 | 32 |
| 1.5 | 8 | 16 |
| 2.0 | 5 | 10 |

The Scientist's Toolkit: Reagents for Sample Size and Power Analysis

| Tool / Reagent | Function in Experimental Design |
|---|---|
| G*Power Software | Free, dedicated software for calculating statistical power and required sample size for a wide range of tests [15]. |
| Pilot Study Materials | Biological reagents and LC-MS consumables used to run a small-scale preliminary experiment to estimate population variability. |
| R / Python Statistical Packages | Programming environments with extensive libraries (e.g., pwr in R) for complex or custom sample size calculations. |
| Internal Metabolomic Database | A historical repository of experimental data from your lab, used to inform realistic estimates of effect sizes and variability. |

Implementing Replication Strategies

Understanding Types of Replication

Replication is the repetition of experimental units or measurements to estimate variability and improve the reliability of inferences. In LC-MS metabolomics, it is critical to distinguish between different types of replication [18].

- Technical Replication: The same biological sample is prepared and/or analyzed multiple times. This helps quantify the variability introduced by the LC-MS platform, sample preparation, and data processing.

- Example: Injecting the same pooled quality control (QC) sample multiple times throughout the run.

- Biological Replication: Different biological subjects (or cultures, etc.) are assigned to the same experimental condition. This accounts for the natural variation within a population and is essential for making inferences about the broader biological population.

- Example: Using 10 different mice from the same strain and treatment group, rather than taking 10 technical measurements from one mouse.

True replication involves applying the same treatment to more than one independent experimental unit. Pseudo-replication, such as making multiple measurements from the same biological subject and treating them as independent, is a common flaw that understates variability and produces falsely confident conclusions [18].

Application Protocol: Designing a Replication Scheme

This protocol ensures that your replication strategy adequately addresses both technical and biological variability.

- Step 1: Define the Experimental Unit. Identify the smallest independent entity to which a treatment is applied (e.g., a single mouse, a single cell culture flask). This dictates what constitutes a biological replicate.

- Step 2: Prioritize Biological Replication. The primary goal is to draw conclusions about a population. Therefore, the budget and resources should first be allocated to a sufficient number of biological replicates, as determined by the sample size calculation.

- Step 3: Incorporate Technical Replication Strategically.

- Replication of Sample Preparation: Process each biological sample independently at least once. Duplicate preparations for a subset of samples can help quantify preparation variance.

- Replication of LC-MS Analysis: The primary technical replication in metabolomics is the repeated analysis of pooled QC samples. These are used to monitor instrument stability, perform batch correction, and model technical variance. It is not necessary to inject every biological sample multiple times.

- Step 4: Randomize the Order. To prevent confounding, the order of sample preparation and MS injection for all biological and technical replicates must be randomized (see "Randomization Procedures to Control Bias").
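
The technical variance captured by repeated pooled QC injections is often summarized as a per-feature relative standard deviation (RSD). The sketch below computes it and flags unstable features. The 30% cut-off is an assumption chosen for illustration, not a value given in this protocol, and the feature names and intensities are made up.

```python
from statistics import mean, stdev


def rsd_percent(values):
    """Relative standard deviation (coefficient of variation) in percent."""
    return stdev(values) / mean(values) * 100.0


def flag_unstable(qc_intensities, max_rsd=30.0):
    """Return {feature: RSD%} for features whose QC RSD exceeds max_rsd.

    qc_intensities: dict mapping feature name to its intensities across
    the repeated pooled QC injections.
    """
    flagged = {}
    for feature, values in qc_intensities.items():
        rsd = rsd_percent(values)
        if rsd > max_rsd:
            flagged[feature] = round(rsd, 1)
    return flagged


# Hypothetical intensities from five pooled QC injections.
qc_intensities = {
    "feature_A": [100.0, 102.0, 98.0, 101.0, 99.0],
    "feature_B": [100.0, 150.0, 60.0, 130.0, 80.0],
}
flagged = flag_unstable(qc_intensities)
print(flagged)
```

Features flagged this way are dominated by technical noise, so additional biological replicates will not rescue them; they are usually removed or re-examined before statistical analysis.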

The workflow below illustrates the relationship between biological and technical replicates in a typical LC-MS metabolomics experiment:

Randomization Procedures to Control Bias

The Role of Randomization

Randomization is the cornerstone of a valid experiment. It involves allocating experimental units to treatment groups, or ordering analytical runs, using a random mechanism [18] [19]. Its primary purpose is to prevent bias and control for unknown or unmeasured confounding variables (e.g., instrument drift, subtle environmental changes, researcher bias) by spreading their potential effects evenly across all groups [18] [20]. Without randomization, treatment effects can become confounded with other factors, rendering conclusions unreliable [18].

Application Protocol: Randomization in Metabolomic Workflow

This protocol details how to implement randomization at key stages of an LC-MS metabolomics study.

Step 1: Random Assignment of Subjects to Treatment Groups.

- Method: Use a computer-generated random number sequence or a dedicated randomization module in statistical software. Do not allocate based on convenience or subjective judgment.

- Consider Block Randomization: For small studies or to ensure perfect balance over time, use block randomization. This ensures that after every few subjects, the number assigned to each group is equal [19] [20]. Vary the block size to maintain unpredictability.
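One way to implement the permuted-block allocation described in Step 1 is sketched below. The function name, group labels, block sizes, and seed are illustrative assumptions; dedicated randomization software remains preferable for regulated studies.

```python
import random


def block_randomize(subject_ids, groups=("control", "treatment"),
                    block_multiples=(1, 2), seed=2024):
    """Assign subjects to groups using permuted blocks of varying size.

    Every block contains each group equally often, so group sizes are
    balanced at the end of every complete block.
    """
    rng = random.Random(seed)  # fixed seed so the allocation can be reproduced and audited
    assignments = {}
    queue = list(subject_ids)
    while queue:
        multiple = rng.choice(block_multiples)  # vary the block size to keep allocation unpredictable
        block = list(groups) * multiple
        rng.shuffle(block)
        for group in block:
            if not queue:
                break  # the final block may be left incomplete
            assignments[queue.pop(0)] = group
    return assignments


if __name__ == "__main__":
    subjects = [f"S{i:02d}" for i in range(1, 13)]
    for subject, group in block_randomize(subjects).items():
        print(subject, group)
```

Recording the seed alongside the allocation list makes the assignment traceable without making it predictable in advance.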

Step 2: Randomize the Sample Preparation Order.

- After biological samples are collected, the order in which they are processed (e.g., protein precipitation, centrifugation, dilution) should be fully randomized. This prevents batch effects in preparation from being systematically linked to a specific treatment group.

Step 3: Randomize the LC-MS Analysis Order.

- This is critical. The sequence in which samples are injected into the LC-MS must be random. A completely randomized design is ideal but can be logistically challenging [21].

- Practical Alternative: Randomized Block Design: If the experiment must be run over several days (batches), treat "Day" or "Batch" as a blocking factor. Then, randomize the order of samples within each batch. This accounts for day-to-day instrumental variation [18] [21]. Pooled QC samples should be interspersed throughout each batch.
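The randomized block design in Step 3 can be sketched as follows: samples are shuffled within each batch and pooled QC injections are interspersed at a fixed interval. The QC label, the interval of five injections, and the three conditioning injections are assumptions chosen for illustration, not recommendations from the cited sources.

```python
import random


def build_run_order(samples_by_batch, qc_every=5, n_conditioning_qc=3, seed=42):
    """Return an injection sequence per batch.

    samples_by_batch maps a batch label (e.g. a day) to a list of sample IDs.
    Samples are randomized within each batch, and a pooled QC is injected
    at the start, after every `qc_every` samples, and at the end.
    """
    rng = random.Random(seed)
    run_order = {}
    for batch, samples in samples_by_batch.items():
        shuffled = list(samples)
        rng.shuffle(shuffled)                        # randomize within the block (batch)
        sequence = ["QC_pool"] * n_conditioning_qc   # condition the system before study samples
        for i, sample in enumerate(shuffled, start=1):
            sequence.append(sample)
            if i % qc_every == 0:
                sequence.append("QC_pool")
        if not sequence or sequence[-1] != "QC_pool":
            sequence.append("QC_pool")               # always close the batch with a QC injection
        run_order[batch] = sequence
    return run_order


if __name__ == "__main__":
    batches = {
        "day1": [f"A{i}" for i in range(1, 13)],
        "day2": [f"B{i}" for i in range(1, 8)],
    }
    for batch, sequence in build_run_order(batches).items():
        print(batch, sequence)
```

Each batch should contain samples from every treatment group; if a batch held only one group, the batch effect and the treatment effect could not be separated.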

Table 2: Comparison of Randomization Methods for LC-MS Run Order

| Method | Description | Advantages | Disadvantages |

|---|---|---|---|

| Complete Randomization | Every sample is assigned a random position in the injection sequence with no restrictions. | Simple; guards against systematic bias and confounding with run order. | May not balance group representation across an instrument drift gradient. |

| Randomized Block Design | Samples are grouped into batches (blocks), and randomization occurs within each batch. | Controls for known sources of variability like day or batch effects [18]. | Requires careful planning; the blocking factor must be included in final statistical models. |

| Stratified Randomization | Used when assigning subjects to groups; ensures balance of a key covariate (e.g., sex, baseline weight). | Increases comparability of groups for known important factors [19]. | Increases complexity, especially with multiple stratification factors. |

The following diagram summarizes the key stages of the LC-MS metabolomics workflow where randomization and replication must be applied:

In liquid chromatography-mass spectrometry (LC-MS) metabolomics, the pre-analytical phase—encompassing sample collection, handling, and storage—is a fundamental determinant of data quality and reliability. Inappropriate sample collection or storage can introduce high variability, instrument interferences, or metabolite degradation, ultimately compromising data integrity and reproducibility [22]. The reproducibility crisis in biomedical research, notably affecting fields like oncology and psychology, underscores the necessity of rigorous standard operating procedures (SOPs) [23]. This protocol details evidence-based procedures for collecting and handling common biological matrices to minimize pre-analytical variation and ensure the generation of robust, reproducible LC-MS metabolomics data.

Key Pre-Analytical Considerations for Experimental Design

Biological and Environmental Factors

Two fundamental biological factors must be considered during study design, as they significantly influence the metabolome:

- Nutritional Status: The metabolic state of a subject (fed vs. fasting) drastically alters biofluid and tissue metabolomes. For instance, in rodents, 16-hour fasting affects one-third to one-half of monitored serum metabolites, increasing fatty and bile acids while decreasing diet- and gut microbiota-derived metabolites [22]. Fasting plasma is typically used to explore systemic metabolic differences between populations.

- Circadian Rhythms: A large fraction of mammalian metabolism undergoes circadian oscillations. In mice, over 40% of the serum metabolome and 45% of the hepatic metabolome are sensitive to time of day, and the two matrices provide complementary information [22]. Time of collection must be carefully chosen and kept consistent across study days.

General Principles for Sample Handling

The following general principles apply to the handling of all biological matrices in metabolomics studies [22]:

- Temperature Control: During collection, samples must be kept at the lowest possible temperature. Immediate snap-freezing is recommended to quench enzymatic activity and prevent oxidation of labile metabolites.

- Aliquoting: Samples should be aliquoted whenever possible to avoid repeated freeze-thaw cycles, which cause progressive loss of sample quality.

- Long-Term Storage: For long-term preservation before analysis, storage at -80°C or lower is essential.

Matrix-Specific Collection and Handling Protocols

Blood-Derived Samples (Plasma/Serum)

Blood is a highly informative but metabolically active biofluid that requires rapid processing.

Detailed Protocol:

- Collection: Draw blood into appropriate vacutainers (e.g., EDTA or heparin tubes for plasma; clot activator tubes for serum).

- Processing:

- For Plasma: Centrifuge whole blood at 1,500-2,000 × g for 10 minutes at 4°C within 30 minutes of collection. Carefully collect the supernatant (plasma) without disturbing the buffy coat.

- For Serum: Allow blood to clot at room temperature for 30 minutes, then centrifuge at 1,500-2,000 × g for 10 minutes at 4°C. Collect the supernatant (serum).

- Storage: Immediately aliquot the plasma/serum into cryovials and snap-freeze in liquid nitrogen. Store at -80°C [22] [24].
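The centrifugation steps are specified in relative centrifugal force (× g), whereas many benchtop centrifuges are set in rpm. The helper below converts between the two using the standard relation RCF = 1.118 × 10⁻⁵ × r × rpm², with r in centimetres. The 10 cm rotor radius in the example is an assumption; use the value from your rotor's documentation.

```python
import math

RCF_CONSTANT = 1.118e-5  # standard conversion constant for radius in cm and speed in rpm


def rcf_for_rpm(rpm: float, rotor_radius_cm: float) -> float:
    """Relative centrifugal force (x g) produced at a given rotor speed."""
    return RCF_CONSTANT * rotor_radius_cm * rpm ** 2


def rpm_for_rcf(rcf_g: float, rotor_radius_cm: float) -> int:
    """Rotor speed (rpm, rounded) needed to reach a target relative centrifugal force."""
    if rcf_g <= 0 or rotor_radius_cm <= 0:
        raise ValueError("rcf_g and rotor_radius_cm must be positive")
    return round(math.sqrt(rcf_g / (RCF_CONSTANT * rotor_radius_cm)))


if __name__ == "__main__":
    radius_cm = 10.0  # hypothetical rotor radius
    for target in (1500, 2000):
        rpm = rpm_for_rcf(target, radius_cm)
        print(f"{target} x g at r = {radius_cm} cm: about {rpm} rpm")
```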

Urine

Urine is non-invasively collected and provides a historical overview of metabolic events but contains residual enzymatic activity.

Detailed Protocol:

- Collection: Collect urine into sterile containers. Choose between timed collection (to capture specific metabolic events) or 24-hour collection (to average out diurnal variability) based on the research question [22].

- Processing: Centrifuge at 2,000-3,000 × g for 10 minutes at 4°C to remove cells, bacteria, and debris. Collect the supernatant.

- Storage: Aliquot the supernatant into cryovials and snap-freeze for storage at -80°C [22].

Tissues (e.g., Liver)

Tissues are highly susceptible to post-collection metabolic changes and require immediate quenching of metabolism.

Detailed Protocol:

- Collection: Rapidly dissect the tissue of interest.

- Quenching and Washing: Immediately rinse the tissue with ice-cold saline or buffer to remove blood. For immediate metabolism quenching, submerge the tissue in liquid nitrogen. Alternatively, use a metal block cooled by liquid nitrogen [22].

- Storage: Store the snap-frozen tissue specimen at -80°C. For homogenization, perform the process under controlled, cold conditions using a bead beater or homogenizer while keeping samples on ice.

Feces

The fecal metabolome serves as a functional readout of gut microbiome activity and is highly sensitive to nutritional challenges [22].

Detailed Protocol:

- Collection: Collect feces into pre-weighed, sterile tubes.

- Homogenization and Aliquoting: For a representative profile, homogenize the entire stool sample or multiple random portions from the specimen before aliquoting.

- Storage: Immediately snap-freeze aliquots and store at -80°C [22].

Cell Cultures

Cell cultures require rapid metabolism quenching to capture the intracellular metabolome accurately.

Detailed Protocol:

- Quenching: At the desired time point, rapidly remove the culture medium. Quench metabolism immediately by washing cells with ice-cold saline and adding a cold extraction solvent (e.g., 80% methanol). Alternatively, directly scrape cells into a cold extraction solvent.

- Collection: Transfer the cell extract containing the metabolites to a tube.

- Storage: Snap-freeze the extracts and store at -80°C [22].

The following workflow summarizes the critical steps from sample collection to data processing:

Figure 1: Workflow for Reproducible Metabolomics Sample Management. This diagram outlines the critical stages from study design to data processing, highlighting steps essential for minimizing degradation and ensuring reproducibility.

Ensuring Reproducibility Through QC and Data Processing

Implementing Quality Control (QC)

Robust quality control is non-negotiable for reproducible metabolomics.

- Internal Standards: Add known amounts of stable isotope-labeled or chemical analogs of endogenous metabolites to all samples during extraction. This corrects for variability in sample preparation and instrument analysis [24].

- Pooled QC Samples: Create a pooled QC sample by combining equal aliquots from all study samples. This pooled QC is analyzed repeatedly throughout the analytical sequence to monitor instrument stability and perform data normalization, correcting for signal drift [25] [24].
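A common way to use the repeated pooled QC injections is to compute each feature's relative standard deviation (RSD) across them and flag features whose signal is unstable. The sketch below assumes a simple mapping of feature IDs to QC intensities; the 30% cutoff and the example values are illustrative assumptions rather than thresholds prescribed by this protocol.

```python
from statistics import mean, stdev


def qc_rsd_percent(intensities):
    """Relative standard deviation (%) of one feature across pooled QC injections."""
    if len(intensities) < 2:
        raise ValueError("at least two QC injections are needed to estimate RSD")
    average = mean(intensities)
    if average == 0:
        return float("inf")
    return 100 * stdev(intensities) / average


def flag_unstable_features(qc_table, max_rsd=30.0):
    """Return {feature_id: RSD%} for features exceeding the RSD cutoff.

    qc_table maps a feature ID to its list of intensities in the QC injections.
    """
    flagged = {}
    for feature_id, intensities in qc_table.items():
        rsd = qc_rsd_percent(intensities)
        if rsd > max_rsd:
            flagged[feature_id] = round(rsd, 1)
    return flagged


if __name__ == "__main__":
    # Made-up intensities for two hypothetical features across five QC injections.
    qc_table = {
        "feature_A": [100, 102, 98, 101, 99],
        "feature_B": [100, 150, 60, 130, 80],
    }
    print(flag_unstable_features(qc_table))
```

Features flagged in this way are usually removed or treated with caution before statistical analysis, since their variation across identical QC injections is technical rather than biological.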

Reproducible Data Processing

The choice of data processing software and parameters significantly impacts results. Inconsistency between tools such as XCMS and MZmine has been a major roadblock, with studies showing that over half of the features detected may not be shared between different software tools [26].

- Trackable Data Processing: Modern tools like asari are designed with explicit algorithmic frameworks for better provenance tracking. Asari employs a "mass track" concept, performing mass alignment before elution peak detection, which helps avoid errors in feature correspondence common in other software [26].

- Reproducibility Assessment: Statistical methods like the MaRR (Maximum Rank Reproducibility) procedure can be applied to assess the consistency of metabolite measurements across technical or biological replicate samples. This non-parametric approach helps distinguish reproducible from irreproducible signals without relying on strict distributional assumptions [25].
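To make the idea of features "shared between software tools" concrete, the sketch below matches two feature lists by m/z and retention time. This is a generic greedy matcher written for illustration; it is not the algorithm used by asari, XCMS, MZmine, or the MaRR procedure, and the 5 ppm and 10 s tolerances are assumptions.

```python
def ppm_difference(mz_a: float, mz_b: float) -> float:
    """Absolute m/z difference expressed in parts per million of mz_b."""
    return abs(mz_a - mz_b) / mz_b * 1e6


def shared_features(features_a, features_b, ppm_tol=5.0, rt_tol_s=10.0):
    """Greedy one-to-one matching of (mz, rt_seconds) features from two tools.

    Returns a list of (feature_from_a, feature_from_b) pairs that agree
    within both the m/z and retention-time tolerances.
    """
    unmatched_b = list(features_b)
    matches = []
    for mz_a, rt_a in features_a:
        for candidate in unmatched_b:
            mz_b, rt_b = candidate
            if ppm_difference(mz_a, mz_b) <= ppm_tol and abs(rt_a - rt_b) <= rt_tol_s:
                matches.append(((mz_a, rt_a), candidate))
                unmatched_b.remove(candidate)  # each feature in B can be matched only once
                break
    return matches


if __name__ == "__main__":
    # Hypothetical feature tables from two processing tools.
    tool_a = [(180.0634, 65.0), (132.1019, 120.0), (255.2330, 600.0)]
    tool_b = [(180.0636, 66.5), (132.1030, 121.0), (300.0000, 50.0)]
    matched = shared_features(tool_a, tool_b)
    print(f"{len(matched)} of {len(tool_a)} features from tool A are also reported by tool B")
```

Reporting the tolerances together with the overlap figure matters, because the proportion of shared features depends strongly on how strict the matching is.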

The Scientist's Toolkit: Essential Reagents and Materials

Table 1: Essential Materials for LC-MS Metabolomics Sample Preparation.

| Item | Function | Key Considerations |

|---|---|---|

| Cryogenic Tubes | Long-term sample storage at -80°C. | Use sterile, DNase/RNase-free tubes that can withstand ultra-low temperatures without cracking. |

| Internal Standards | Correction for technical variability during quantification. | Use a mixture of stable isotope-labeled compounds not expected to be in the sample. |

| Cryoprotectants | Protect tissue integrity during freezing. | Options include sucrose, DMSO, or glycerol for specific sample types. |

| Protein Precipitation Solvents | Deproteinization of samples (e.g., plasma). | Cold methanol, acetonitrile, or methanol/acetonitrile mixtures are commonly used. |

| Solid-Phase Extraction (SPE) Cartridges | Clean-up and fractionation to reduce matrix effects. | Select sorbent chemistry (e.g., C18, HILIC) based on the metabolite class of interest. |

Troubleshooting Common Pre-Analytical Challenges

Table 2: Common Pre-Analytical Challenges and Solutions.

| Challenge | Impact on Data | Recommended Solution |

|---|---|---|

| Metabolite Degradation | Loss of labile metabolites, introduction of degradation artifacts. | Maintain cold chain; rapid snap-freezing; use enzyme inhibitors (e.g., protease inhibitor cocktails) for specific pathways. |

| Matrix Effects | Ion suppression/enhancement during MS analysis, reducing accuracy. | Sample clean-up (e.g., SPE, filtration); use of appropriate internal standards. |

| Batch Variability | Introduces non-biological variance that can obscure true effects. | Randomize sample processing order; use pooled QC samples for batch normalization. |

| Poor Reproducibility | Inability to replicate findings within or across labs. | Adhere to detailed SOPs; implement comprehensive QC; use trackable data processing software. |

Reproducibility in LC-MS metabolomics is not a single step but a philosophy integrated throughout the entire workflow, beginning the moment a sample is collected. By rigorously controlling for biological variables like nutritional status and circadian rhythm, adhering to matrix-specific SOPs for collection and storage, implementing a robust QC system, and utilizing transparent data processing tools, researchers can substantially reduce degradation and variability. These practices form the foundation upon which reliable and impactful metabolomics science is built, ultimately fostering greater trust and enabling more rapid advancement in biomedical research and drug development.

Liquid chromatography-mass spectrometry (LC-MS) has emerged as the cornerstone technique for global metabolomics, enabling the detection of hundreds to thousands of metabolites in a single analytical run [27]. The success of any LC-MS metabolomics study is fundamentally dependent on the sample preparation step, particularly the extraction protocol, which directly influences metabolite coverage, data quality, and analytical reproducibility [27]. Biological samples such as plasma and serum contain proteins and phospholipids that can interfere with LC-MS analysis by causing ion suppression, enhancing matrix effects, and accelerating chromatographic column deterioration [28].

This application note systematically compares three principal extraction methodologies—solvent precipitation, liquid-liquid extraction (LLE), and solid-phase extraction (SPE)—within the context of LC-MS metabolomics protocol research. We provide a detailed comparative analysis based on quantitative performance metrics and offer optimized experimental protocols to guide researchers in selecting and implementing the most appropriate extraction strategy for their specific research objectives. The selection of an optimal extraction method must balance multiple factors, including metabolite coverage, reproducibility, recovery efficiency, and matrix effect minimization [27] [28].

Comparative Analysis of Extraction Techniques

The table below summarizes the key performance characteristics of the three primary extraction methods based on comparative studies in human plasma and serum.

Table 1: Comparison of Metabolite Extraction Methods for LC-MS Metabolomics

| Extraction Method | Metabolite Coverage | Recovery Efficiency | Matrix Effects | Method Repeatability | Sample Consumption | Processing Time |

|---|---|---|---|---|---|---|

| Solvent Precipitation | Broadest coverage [27] | Excellent accuracy [27] | High susceptibility [28] | Outstanding [27] | Moderate | Fastest |

| Liquid-Liquid Extraction | Complementary to solvent methods [28] | Good for lipophilic compounds [29] | Moderate to low [30] | Good | Low to moderate | Moderate |

| Solid-Phase Extraction | Selective coverage [27] | Variable by sorbent [28] | Lowest [28] | Risk of lower repeatability [27] | Highest | Longest |

Detailed Method Comparison

Solvent Precipitation remains the most widely used extraction technique in global metabolomics due to its broad metabolite coverage and simplicity [27]. Methanol and methanol/acetonitrile mixtures demonstrate outstanding accuracy and are considered benchmark methods for metabolomics studies [27]. However, this broad specificity results in highly complex samples that can hinder the detection of low abundance metabolites and create significant matrix effects due to co-extraction of interfering compounds [28].

Liquid-Liquid Extraction offers an alternative approach that can provide complementary metabolite coverage when combined with solvent-based methods [28]. Methyl-tert-butyl ether (MTBE) has gained popularity for its ability to extract both polar and non-polar metabolites, demonstrating particular strength in lipidomics applications [28]. The selectivity of LLE can be finely tuned by manipulating solvent polarity and pH, allowing for targeted extraction of specific metabolite classes [29].

Solid-Phase Extraction provides the highest degree of selectivity among the three methods, resulting in significantly reduced matrix effects [28]. SPE methods, particularly mixed-mode phases combining reversed-phase and ion-exchange mechanisms, excel at removing phospholipids—major contributors to ion suppression in LC-MS analysis [30]. While SPE tends to reduce overall metabolite coverage compared to solvent precipitation, it offers superior sample clean-up and can be optimized for specific metabolite classes [27].

Table 2: Optimal Applications for Each Extraction Method

| Research Objective | Recommended Method | Rationale |

|---|---|---|

| Global Untargeted Metabolomics | Methanol precipitation | Broadest metabolite coverage with excellent repeatability [27] |

| Targeted Analysis of Specific Metabolite Classes | Mixed-mode SPE | Enhanced selectivity and reduced matrix effects [30] |

| Lipidomics | MTBE LLE | Optimal recovery of both polar and lipid metabolites [28] |

| High-Throughput Screening | 96-well plate protein precipitation | Rapid processing and easy automation [30] |

| Matrix-Sensitive Analyses | Phospholipid removal SPE | Significant reduction of ion suppression [30] |

Experimental Protocols

Methanol-Based Solvent Precipitation Protocol

Principle: This method utilizes cold organic solvents to precipitate proteins while maintaining metabolite integrity, providing the broadest metabolite coverage for untargeted metabolomics [27].

Reagents and Materials:

- LC-MS grade methanol (pre-chilled to -20°C)

- Pooled human plasma or serum samples

- Internal standard mixture (e.g., stable isotope-labeled compounds)

- Microcentrifuge tubes (1.5 mL)

- Centrifuge capable of 14,000 × g

- Nitrogen evaporator

- Vortex mixer

Critical Steps and Optimization:

- Sample Preparation: Thaw plasma/serum samples on ice and vortex thoroughly before aliquoting.

- Precipitation: Maintain solvents at -20°C before use to ensure efficient protein precipitation and minimize enzymatic activity.

- Centrifugation: Ensure temperature-controlled centrifugation at 4°C to maintain metabolite stability.

- Reconstitution: Use initial mobile phase composition for reconstitution to ensure compatibility with LC-MS analysis.

Method Notes: This protocol demonstrates outstanding repeatability and is considered the gold standard for global metabolomics [27]. For enhanced coverage of lipids, a modified protocol using methanol/MTBE (1:3) can be employed [28].

Mixed-Mode Solid-Phase Extraction Protocol

Principle: Mixed-mode SPE utilizes sorbents with multiple retention mechanisms (reversed-phase and ion-exchange) to selectively isolate metabolite classes while effectively removing phospholipids and other interferents [30].

Reagents and Materials:

- Mixed-mode SPE cartridges (e.g., Oasis MCX or MAX, 30 mg/1 mL)

- LC-MS grade methanol, water, and ammonium hydroxide

- Formic acid (LC-MS grade)

- Vacuum manifold for SPE processing

- Nitrogen evaporator

Critical Steps and Optimization:

- Conditioning: Ensure proper cartridge conditioning to activate the sorbent for optimal recovery.

- Sample Loading: Dilute plasma with water (1:2) to ensure proper retention of polar metabolites.

- Selective Elution: Employ stepwise elution with pH adjustment to fractionate metabolite classes (acidic, basic, neutral).

- Eluate Handling: Evaporate eluates immediately after collection to prevent metabolite degradation.

Method Notes: Mixed-mode SPE provides excellent removal of phospholipids, significantly reducing matrix effects in LC-MS analysis [30]. While overall metabolite coverage may be lower than solvent precipitation, the improved data quality and reduced ion suppression make it ideal for targeted analyses [28].

Methyl-Tert-Butyl Ether (MTBE) Liquid-Liquid Extraction Protocol

Principle: MTBE-based LLE leverages the differential solubility of metabolites in immiscible solvents to extract both polar and non-polar compounds, making it particularly suitable for lipidomics and broad-spectrum metabolite analysis [28].

Reagents and Materials:

- LC-MS grade MTBE, methanol, and water

- Internal standard mixture

- Glass centrifuge tubes (recommended for organic solvents)

- Centrifuge capable of 3,000 × g

- Vortex mixer

- Nitrogen evaporator

Procedure:

- Sample Preparation: Aliquot 50 μL of plasma/serum into a glass centrifuge tube.

- Methanol Addition: Add 150 μL of methanol, vortex for 30 seconds to precipitate proteins.

- MTBE Addition: Add 500 μL of MTBE, vortex vigorously for 1 minute.

- Phase Separation: Add 125 μL of water to induce phase separation, vortex for 30 seconds.

- Centrifugation: Centrifuge at 3,000 × g for 10 minutes at 4°C.

- Fraction Collection:

- Collect the upper organic phase (MTBE layer) containing lipids and non-polar metabolites.

- Collect the lower aqueous phase (methanol/water layer) containing polar metabolites.

- Concentration: Evaporate both fractions to dryness under a stream of nitrogen.

- Reconstitution: Reconstitute the organic fraction in 50 μL isopropanol/acetonitrile (1:1) and the aqueous fraction in 50 μL initial mobile phase for LC-MS analysis.

Method Notes: MTBE extraction provides a comprehensive approach for simultaneous analysis of polar and non-polar metabolomes [28]. The partitioning behavior can be optimized by adjusting the ratio of organic to aqueous solvents based on the LogP values of target analytes [29].
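When the available plasma volume differs from the 50 μL used above, the solvent volumes are often scaled proportionally. The helper below is a minimal sketch of that arithmetic, taking the volumes from the procedure as the reference; it assumes that linear scaling preserves the phase behavior, which should be verified experimentally for large deviations from the reference volume.

```python
# Reference volumes (in microlitres) taken from the procedure above.
REFERENCE_VOLUMES_UL = {
    "plasma": 50.0,
    "methanol": 150.0,
    "MTBE": 500.0,
    "water": 125.0,
}


def scale_mtbe_volumes(plasma_ul: float) -> dict:
    """Scale all solvent volumes in proportion to the plasma volume."""
    if plasma_ul <= 0:
        raise ValueError("plasma_ul must be positive")
    factor = plasma_ul / REFERENCE_VOLUMES_UL["plasma"]
    return {name: round(volume * factor, 1) for name, volume in REFERENCE_VOLUMES_UL.items()}


if __name__ == "__main__":
    for name, volume in scale_mtbe_volumes(20.0).items():
        print(f"{name}: {volume} uL")
```

Keeping the ratios fixed matters more than the absolute volumes, because the methanol/MTBE/water proportions determine where the two phases separate.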

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Essential Reagents and Materials for Metabolite Extraction

| Item | Function | Application Notes |

|---|---|---|

| LC-MS Grade Methanol | Protein precipitant and extraction solvent | Provides broad metabolite coverage; pre-chill to -20°C for optimal protein precipitation [27] [31] |

| LC-MS Grade Acetonitrile | Protein precipitant | Effective for phospholipid removal; often used in combination with methanol [27] [30] |

| Methyl-Tert-Butyl Ether (MTBE) | LLE solvent | Excellent for simultaneous extraction of polar and non-polar metabolites [28] |

| Mixed-Mode SPE Cartridges | Selective metabolite isolation | Combine reversed-phase and ion-exchange mechanisms for enhanced selectivity [30] |

| Stable Isotope-Labeled Internal Standards | Quality control and quantification correction | Essential for monitoring extraction efficiency and correcting for matrix effects [32] |

| Formic Acid and Ammonium Hydroxide | pH adjustment for SPE | Critical for controlling ionization state and retention of metabolites in mixed-mode SPE [28] |

| Phospholipid Removal Plates | Specific phospholipid removal | Zirconia-coated silica plates selectively remove phospholipids to reduce matrix effects [30] |

Method Selection and Integration Strategy

Decision Framework for Method Selection

Choosing the appropriate extraction method requires careful consideration of research goals, sample type, and analytical resources. The following framework provides guidance for method selection:

For Untargeted Discovery Studies: Methanol precipitation should be the default choice due to its extensive metabolite coverage and proven reliability [27]. The minimal sample manipulation preserves a comprehensive metabolite profile, making it ideal for hypothesis-generating research.

For Targeted Quantification: SPE methods, particularly mixed-mode approaches, offer superior performance for quantitative analysis by significantly reducing matrix effects and improving method sensitivity [30]. The selective nature of SPE provides cleaner extracts, resulting in enhanced signal-to-noise ratios for target analytes.

For Specialized Applications: LLE with MTBE excels in lipidomics and when analyzing both polar and non-polar metabolites simultaneously [28]. The ability to partition metabolites based on polarity facilitates class-specific analysis and can be further optimized using salting-out assisted liquid-liquid extraction (SALLE) for hydrophilic compounds [29].

Orthogonal Method Integration

For studies requiring maximal metabolome coverage, integrating multiple orthogonal extraction methods can significantly increase metabolite detection. Research demonstrates that combining methanol precipitation with ion-exchange SPE or MTBE LLE can increase metabolite coverage by 34-80% compared to the best single method [28]. This approach, while increasing MS analysis time and sample consumption, provides the most comprehensive view of the metabolome.

The selection of an appropriate metabolite extraction strategy is a critical determinant of success in LC-MS metabolomics. Solvent precipitation methods, particularly methanol-based protocols, provide the broadest metabolite coverage and outstanding repeatability, making them ideal for untargeted discovery studies. SPE techniques offer enhanced selectivity and significantly reduced matrix effects, beneficial for targeted quantification. LLE methods, especially MTBE-based protocols, provide complementary coverage and are particularly well-suited for lipidomics applications.

Researchers should align their extraction strategy with specific research objectives, considering the trade-offs between metabolite coverage, matrix effects, and processing complexity. For the most comprehensive metabolomic analysis, integrating orthogonal extraction methods can substantially increase metabolite coverage, providing a more complete picture of the biological system under investigation.

Executing the LC-MS Workflow: From Sample Preparation to Data Acquisition

Liquid chromatography-mass spectrometry (LC-MS) metabolomics has become a cornerstone of modern biological and clinical research, providing a direct readout of physiological and pathological states by comprehensively measuring small molecules. The sample preparation stage, particularly metabolite extraction from complex biofluids like plasma, is a critical pre-analytical step that profoundly influences data quality, reproducibility, and biological interpretation. The selection of an appropriate extraction method must balance multiple competing factors: comprehensiveness of metabolite coverage, extraction efficiency, method repeatability, and the minimization of matrix effects [27] [33].

This application note systematically evaluates and compares seven solvent-based and solid-phase extraction methods for LC-MS metabolomics analysis of human plasma. Framed within a broader thesis on protocol standardization, this work provides researchers and drug development professionals with detailed, evidence-based protocols and performance data to facilitate the rational design of metabolomics workflows, thereby enhancing the impact and reliability of their research [27].

Critical Comparison of Extraction Method Performance

A rigorous assessment of seven common extraction methods was conducted using standard analytes spiked into both buffer and human plasma. The evaluated methods included three conventional solvent precipitations (Methanol, Methanol/Ethanol, Methanol/MTBE), one liquid-liquid extraction (LLE with MTBE), and three solid-phase extraction (SPE) protocols (C18, Mixed-Mode Ion-Exchange (IEX), and Divinylbenzene-Pyrrolidone (PEP2)) [28]. Performance was measured against key metrics including absolute recovery, matrix effects, repeatability, and metabolite coverage in combination with reversed-phase (RP) and mixed-mode (IEX/RP) LC-MS analyses.

Table 1: Comprehensive Performance Metrics of Seven Extraction Methods for Plasma Metabolomics

| Extraction Method | Average Recovery (%) | Matrix Effects (Signal Suppression, %) | Method Repeatability (%RSD) | Metabolite Coverage | Key Strengths | Key Limitations |

|---|---|---|---|---|---|---|

| Methanol Precipitation | 80-120 [34] | High [28] | Outstanding [27] | Broad, high for polar metabolites [27] [28] | Broad specificity, outstanding accuracy, simple protocol [27] | High matrix effects, highly complex sample [28] |

| Methanol/Ethanol Precipitation | Similar to Methanol [28] | High [28] | Excellent [28] | Wide, comparable to Methanol [28] | Excellent precision, wide selectivity [28] | High ion suppression |

| Methanol/MTBE Precipitation | Similar to Methanol [28] | High [28] | Excellent [28] | Wide [28] | Good for polar & lipid metabolomes [28] | High solvent consumption |

| MTBE LLE | Variable (6-93%) [35] | ~50% post-EME [35] | 2-15% [35] | Orthogonal to Methanol [28] | Good for polar & lipid metabolomes, robotic compatibility [28] | Variable recovery |

| C18 SPE | Selective | Lower than solvents [28] | Good [27] | Selective for non-polar metabolites | Reduced matrix effects, clean extracts | Lower overall metabolite coverage [27] |

| Mixed-Mode IEX SPE | Selective | Lower [28] | Good [27] | Orthogonal, good for ionic metabolites [28] | High orthogonality to solvent methods [27] | Risk of low reproducibility; more selective [27] |

| PEP2 SPE | Selective | Lower [28] | Good [27] | Moderate | Reduced phospholipids, good repeatability [27] | More selective, lower coverage [27] |

The data in Table 1 reveal that no single extraction method is superior across all metrics. Methanol-based solvent precipitation provides the best combination of broad metabolite coverage and high repeatability, confirming its status as a default method for global metabolomics [27] [28]. However, this broad specificity comes at the cost of significant matrix effects due to the co-extraction of interfering compounds, particularly phospholipids, which can suppress ionization of lower-abundance metabolites [28].

SPE methods, while generally more selective and resulting in lower metabolite coverage, produce cleaner extracts with significantly reduced matrix effects. Among SPE variants, mixed-mode IEX demonstrated the highest orthogonality to methanol-based methods, making it a strong candidate for sequential extraction protocols aimed at maximizing total metabolome coverage [28]. The MTBE-based LLE also showed good orthogonality and is particularly valuable for workflows targeting both polar and lipid metabolites [28].
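Recovery and matrix effects of the kind compared above are often estimated with a three-sample spiking design: a standard in neat solvent or buffer, a standard spiked into the extract after extraction, and a standard spiked into the matrix before extraction. The sketch below applies that widely used scheme; it is an assumption that the cited study used comparable definitions, and the peak areas are invented for illustration.

```python
def extraction_metrics(neat_area: float, post_spike_area: float, pre_spike_area: float) -> dict:
    """Matrix effect, recovery, and process efficiency from three peak areas.

    neat_area:       standard in neat solvent or buffer (no matrix)
    post_spike_area: standard spiked into the extract after extraction
    pre_spike_area:  standard spiked into the matrix before extraction
    """
    if neat_area <= 0 or post_spike_area <= 0:
        raise ValueError("neat_area and post_spike_area must be positive")
    matrix_effect = 100 * post_spike_area / neat_area    # 100% means no suppression or enhancement
    recovery = 100 * pre_spike_area / post_spike_area    # losses caused by the extraction itself
    process_efficiency = 100 * pre_spike_area / neat_area
    return {
        "matrix_effect_percent": matrix_effect,
        "signal_suppression_percent": 100 - matrix_effect,
        "recovery_percent": recovery,
        "process_efficiency_percent": process_efficiency,
    }


if __name__ == "__main__":
    # Hypothetical peak areas for one spiked standard.
    for name, value in extraction_metrics(1.0e6, 6.0e5, 5.4e5).items():
        print(f"{name}: {value:.1f}")
```

Separating the two quantities shows whether a low signal stems from analyte lost during extraction or from ion suppression in the source, which call for different remedies.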

Table 2: Orthogonality and Synergistic Potential of Extraction Methods

| Method Combination | Increase in Metabolite Coverage vs. Best Single Method | Recommended Application |

|---|---|---|

| Methanol + IEX SPE | High | Maximizing coverage for discovery-phase studies |

| Methanol + MTBE LLE | High [28] | Integrated polar and lipid metabolomics |

| Methanol + C18 SPE | Moderate | Studies focusing on non-polar metabolite classes |

| Single Methanol Method | Baseline (0%) | High-throughput targeted analyses or resource-limited studies |

The combination of multiple, orthogonal extraction methods can increase metabolome coverage by 34-80% compared to the best single extraction protocol, albeit with a corresponding increase in MS analysis time and sample consumption [28]. This strategy is particularly powerful for untargeted discovery-phase studies where comprehensive metabolite detection is the primary objective.
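The coverage gain from combining methods is measured against the best single method, not against the sum of the individual counts. The sketch below shows that arithmetic on sets of detected metabolite identifiers; the method names and identifiers are made up for illustration.

```python
def coverage_gain_percent(feature_sets: dict):
    """Return (best_single_method, % gain of the combined set over that method).

    feature_sets maps a method name to the set of metabolite IDs it detected.
    """
    if not feature_sets:
        raise ValueError("feature_sets must not be empty")
    best_method = max(feature_sets, key=lambda method: len(feature_sets[method]))
    best_count = len(feature_sets[best_method])
    if best_count == 0:
        raise ValueError("no method detected any metabolite")
    combined = set().union(*feature_sets.values())  # metabolites found by at least one method
    gain = 100 * (len(combined) - best_count) / best_count
    return best_method, gain


if __name__ == "__main__":
    # Hypothetical detections: methanol finds M1-M10, IEX SPE finds M6-M14.
    detections = {
        "methanol": {f"M{i}" for i in range(1, 11)},
        "iex_spe": {f"M{i}" for i in range(6, 15)},
    }
    method, gain = coverage_gain_percent(detections)
    print(f"Best single method: {method}; combined coverage gain: {gain:.0f}%")
```

Only metabolites unique to the second method add to the gain, which is why orthogonal methods contribute more than methods with heavily overlapping selectivity.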

Detailed Experimental Protocols

Recommended Standardized Workflow

The following diagram illustrates the generalized workflow for metabolite extraction from plasma, common to all methods detailed in the subsequent sections.

Protocol 1: Methanol Precipitation (Monophasic)

This method is recommended for most untargeted profiling studies due to its broad specificity and high reproducibility [27].

- Step 1: Thaw plasma samples on ice or at 4°C. Vortex thoroughly before aliquoting.

- Step 2: Pipette 50 µL of plasma into a pre-cooled microcentrifuge tube.

- Step 3: Add 150 µL of cold (-20°C) LC/MS-grade methanol containing appropriate internal standards (e.g., stable isotope-labeled amino acids). Vortex vigorously for 30-60 seconds.

- Step 4: Incubate the mixture for 20 minutes at -20°C to facilitate protein precipitation.

- Step 5: Centrifuge at >14,000 × g for 15 minutes at 4°C.

- Step 6: Carefully transfer the supernatant to a new LC-MS vial. The pellet, containing precipitated proteins, is discarded.

- Step 7 (Optional): Evaporate the supernatant under a gentle stream of nitrogen or using a vacuum concentrator. Reconstitute the dried extract in a volume of initial mobile phase suitable for your LC-MS system (e.g., 100 µL of water:acetonitrile, 95:5). Vortex thoroughly before analysis.
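Step 5 specifies the spin in units of × g, while many benchtop centrifuges are set in rpm. The sketch below applies the standard relation RCF = 1.118 × 10⁻⁵ × r × rpm² (r in cm) to convert between the two; the 8.0 cm rotor radius is a hypothetical value and should be replaced with the figure for the rotor actually in use.

```python
import math

# Standard relation: RCF = 1.118e-5 * radius_cm * rpm**2
RCF_CONSTANT = 1.118e-5


def rcf_to_rpm(rcf_g, rotor_radius_cm):
    """Rotor speed (rpm) needed to reach a given relative centrifugal force."""
    return math.sqrt(rcf_g / (RCF_CONSTANT * rotor_radius_cm))


def rpm_to_rcf(rpm, rotor_radius_cm):
    """Relative centrifugal force (x g) produced at a given rotor speed."""
    return RCF_CONSTANT * rotor_radius_cm * rpm ** 2


# Hypothetical rotor radius; check the manufacturer's specification.
rpm_needed = rcf_to_rpm(14_000, rotor_radius_cm=8.0)
print(f"Set the centrifuge to at least {math.ceil(rpm_needed)} rpm")
```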

Protocol 2: Matyash/MTBE Extraction (Biphasic)

This method is ideal for simultaneous extraction of polar metabolites and lipids, providing a more comprehensive view of the metabolome [36].

- Step 1: Aliquot 40 µL of plasma into a glass tube.

- Step 2: Add 150 µL of LC/MS-grade methanol (with 1 mM BHT as antioxidant) and spike with internal standards. Vortex well.

- Step 3: Add 500 µL of methyl tert-butyl ether (MTBE). Vortex continuously for 1 hour at room temperature.

- Step 4: Add 125 µL of LC/MS-grade water to induce phase separation. Vortex briefly and incubate on ice for 10 minutes.

- Step 5: Centrifuge at 2,000 × g for 10 minutes at room temperature. This yields two liquid phases and a pellet: an upper organic phase (lipids), a lower aqueous phase (polar metabolites), and a protein pellet at the bottom of the tube.

- Step 6: Carefully collect the upper organic phase (lipids) and the lower aqueous phase (polar metabolites) into separate vials.

- Step 7: Dry both fractions under nitrogen or vacuum. Reconstitute each in solvents compatible with your chosen LC-MS method (e.g., methanol for lipids, water:acetonitrile for polar metabolites).
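When the available plasma volume differs from 40 µL, the solvent volumes can be scaled so that the methanol:MTBE:water proportions of Steps 2 to 4 are preserved. The helper below is a sketch under the assumption that simple linear scaling is acceptable; the protocol itself only specifies the 40 µL case, and the function name is hypothetical.

```python
# Reference volumes (µL) taken from Steps 1-4 of the protocol
REFERENCE_UL = {"plasma": 40.0, "methanol": 150.0, "mtbe": 500.0, "water": 125.0}


def scale_mtbe_extraction(plasma_ul):
    """Scale every reagent volume linearly with the plasma input."""
    factor = plasma_ul / REFERENCE_UL["plasma"]
    return {name: volume * factor for name, volume in REFERENCE_UL.items()}


volumes = scale_mtbe_extraction(20.0)
for name, volume in volumes.items():
    print(f"{name:>8}: {volume:6.1f} µL")
```

Any scaled protocol should be verified experimentally, since phase separation and recovery may not behave identically at very small volumes.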

Protocol 3: Mixed-Mode Ion-Exchange (IEX) Solid-Phase Extraction

Use this method as an orthogonal technique to methanol precipitation to increase coverage of ionic metabolites [28].

- Step 1: Pre-condition the IEX SPE sorbent (e.g., divinylbenzene conjugated with sulfonic acid and quaternary amine moieties) with 1 mL of methanol.

- Step 2: Equilibrate the sorbent with 1 mL of water.

- Step 3: Load 50 µL of plasma (pre-diluted 1:1 with water or a weak acid/base depending on target metabolite class) onto the SPE cartridge.

- Step 4: Wash with 1-2 mL of water or a mild buffer (e.g., 5 mM ammonium acetate) to remove unretained neutral compounds and salts.

- Step 5: Elute retained metabolites sequentially using solvents of increasing strength and pH manipulation. A typical sequence might be:

- Elution 1 (Acidic Analytes): 1 mL of methanol with 2% formic acid.

- Elution 2 (Basic Analytes): 1 mL of methanol with 5% ammonium hydroxide.

- Step 6: Combine or keep separate eluates based on analytical needs. Dry under nitrogen or vacuum and reconstitute in LC-MS compatible solvent.

The Scientist's Toolkit: Essential Research Reagents & Materials

Successful and reproducible metabolomics sample preparation relies on the use of high-quality, MS-compatible materials. The following table lists essential reagents and materials.

Table 3: Essential Research Reagents and Materials for Plasma Metabolite Extraction

| Item Name | Specification / Example | Critical Function | Notes for Use |
|---|---|---|---|
| Plasma Samples | Collected with EDTA or heparin anticoagulant; stored at -80°C | Primary biological matrix | Avoid repeated freeze-thaw cycles; thaw on ice [33] |
| LC-MS Grade Methanol | Optima LC/MS grade or equivalent | Primary extraction solvent, protein precipitation | High purity minimizes background interference |
| LC-MS Grade Acetonitrile | Optima LC/MS grade or equivalent | Modifies extraction selectivity | Often used in combination with methanol (e.g., 1:1) [27] |
| MTBE (Methyl tert-butyl ether) | HPLC grade or higher | Organic solvent for biphasic LLE | Less dense than water; organic layer forms on top [36] |
| Internal Standards | Stable isotope-labeled metabolites (e.g., L-Phenylalanine-d8, L-Valine-d8) [6] | Monitors extraction efficiency & data normalization | Should be added at the very beginning of extraction [6] |
| Formic Acid | Optima LC/MS grade, 99%+ | Mobile phase additive, aids ionization | Typically used at 0.1% in mobile phases [6] |
| Ammonium Formate/Acetate | LC-MS grade, 99%+ | Mobile phase buffer for improved chromatography | Typically used at 5-10 mM concentration [6] |
| SPE Cartridges | Mixed-mode IEX, C18, PEP2 chemistries | Selective clean-up and fractionation | IEX provides high orthogonality to solvent methods [28] |
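The normalization role of the internal standards listed above can be illustrated with a short calculation: each analyte peak area is divided by the internal-standard peak area from the same sample, and the coefficient of variation (CV) is compared before and after. This is a minimal sketch with hypothetical peak areas and function names, not a complete normalization pipeline.

```python
from statistics import mean, stdev


def normalize_to_internal_standard(analyte_areas, is_areas):
    """Response ratio: analyte peak area divided by the internal-standard
    peak area measured in the same sample."""
    return [analyte / standard for analyte, standard in zip(analyte_areas, is_areas)]


def percent_cv(values):
    """Coefficient of variation (%) using the sample standard deviation."""
    return 100.0 * stdev(values) / mean(values)


# Hypothetical peak areas for three replicate extractions (illustrative only)
analyte = [1000, 1200, 800]
internal_standard = [500, 600, 400]

ratios = normalize_to_internal_standard(analyte, internal_standard)
print(f"CV before normalization: {percent_cv(analyte):.1f}%")
print(f"CV after normalization:  {percent_cv(ratios):.1f}%")
```

In this contrived case the variation is entirely shared with the internal standard, so the ratio removes it completely; real data will show only partial correction.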

The optimal choice of metabolite extraction method is dictated by the specific goals of the study. Based on the systematic comparison presented herein, the following evidence-based recommendations are proposed:

- For Global Untargeted Metabolomics: Methanol precipitation remains the recommended starting point due to its broad metabolite coverage, simplicity, and high reproducibility [27]. Researchers should be aware of its susceptibility to matrix effects and employ appropriate internal standards to correct for ionization suppression [28].

- For Maximum Metabolome Coverage: For discovery-phase research where comprehensiveness is the priority, a sequential extraction protocol combining methanol precipitation with an orthogonal method like mixed-mode IEX SPE or MTBE LLE is highly recommended. This approach can increase coverage by 34-80% compared to the best single method [28].

- For High-Throughput Targeted Analysis: Methanol precipitation is also suitable for targeted assays, but SPE methods or novel techniques like Electromembrane Extraction (EME) should be considered if the target analytes are known to suffer from significant matrix effects in solvent-only extracts [35]. EME, for instance, can drastically reduce matrix effects even with limited sample dilution [35].

- For Integrated Polar and Lipid Metabolomics: The Matyash/MTBE biphasic extraction provides a robust and efficient single-protocol solution for simultaneous preparation of both polar and lipid fractions, simplifying the workflow and reducing sample consumption [36].

In conclusion, the rational selection and application of these optimized extraction protocols, tailored to the analytical objectives, will significantly enhance the quality and biological relevance of data generated in LC-MS metabolomics studies, thereby strengthening the foundation for subsequent biomarker discovery, drug development, and clinical translation.

In liquid chromatography-mass spectrometry (LC-MS) metabolomics, the selection of the chromatographic mode is a fundamental determinant of the coverage and quality of the analytical data. Reversed-phase (RP) chromatography has long been the default mode for many LC-MS applications due to its robustness, high separation efficiency, and straightforward compatibility with electrospray ionization (ESI) [37]. However, its limitations in retaining highly polar metabolites have encouraged the adoption of alternative techniques. Hydrophilic interaction liquid chromatography (HILIC) has emerged as a powerful complementary technique, offering orthogonal selectivity for polar and ionizable compounds that are often poorly retained in RP [37]. A comprehensive LC-MS metabolomics protocol should leverage the strengths of both RP and HILIC to achieve maximal coverage of the metabolome, which encompasses a vast diversity of physicochemical properties [37] [38]. This application note provides a detailed comparison and protocols for implementing these two chromatographic modes.

Fundamental Principles and Separation Mechanisms

Reversed-Phase (RP) Chromatography

The retention mechanism in RP chromatography is primarily driven by hydrophobic interactions between analytes and the non-polar stationary phase. Common stationary phases include C18, C8, and phenyl columns. Analytes are eluted using a gradient that starts with a predominantly aqueous mobile phase and increases the proportion of an organic solvent, typically methanol or acetonitrile. This environment is highly compatible with ESI-MS, providing good ionization efficiency for a wide range of mid- to non-polar compounds [37].

Hydrophilic Interaction (HILIC) Chromatography

HILIC functions through a more complex, multimodal retention mechanism. It employs a polar stationary phase (e.g., bare silica, amide, zwitterionic) and a mobile phase with a high proportion of an organic solvent, usually acetonitrile. The primary mechanism is the partitioning of analytes between the bulk organic-rich mobile phase and a water-enriched layer that forms on the surface of the stationary phase [37] [39]. Additional interactions, such as ion exchange, dipole-dipole interactions, and hydrogen bonding, also contribute to retention, depending on the stationary phase chemistry and mobile phase conditions [37] [40]. The high organic content of HILIC mobile phases enhances ESI-MS sensitivity by improving desolvation and ionization efficiency [37] [39].

Table 1: Core Principles of Reversed-Phase and HILIC Chromatography

| Feature | Reversed-Phase (RP) | Hydrophilic Interaction (HILIC) |
|---|---|---|
| Retention Mechanism | Hydrophobic partitioning | Hydrophilic partitioning, hydrogen bonding, ionic interactions |
| Stationary Phase | Non-polar (C18, C8) | Polar (silica, amide, zwitterionic, diol) |
| Typical Mobile Phase | Water-methanol or water-acetonitrile gradient | High acetonitrile (60-95%) to aqueous buffer gradient [39] |
| Elution Order | Polar compounds elute first | Non-polar compounds elute first |
| Ideal for Analytes | Mid- to non-polar molecules | Polar and ionizable molecules [37] |

Comparative Performance in Metabolomics Applications

Analyte Coverage and Selectivity

The orthogonality of RP and HILIC selectivity is their greatest advantage when used together. RP chromatography excels at separating lipids, non-polar secondary metabolites, and other hydrophobic compounds. In contrast, HILIC is indispensable for retaining polar metabolites such as amino acids, nucleosides, nucleotides, organic acids, saccharides, and neurotransmitters, which often elute in or near the void volume in RP [37] [40]. Research has shown that integrating HILIC-MS into a metabolomics workflow significantly broadens metabolome coverage compared to using reversed-phase LC-MS alone [37] [38].

Chromatographic Performance and Sensitivity

RP chromatography is generally characterized by high separation efficiency and sharp peak shapes, leading to high peak capacity [37] [41]. HILIC can sometimes suffer from broader peaks and longer equilibration times [37] [41]. However, regarding sensitivity, HILIC often provides an advantage for polar analytes. The organic-rich mobile phase promotes superior desolvation and ionization in the ESI source, frequently resulting in lower limits of detection for polar metabolites compared to RP [39]. A systematic evaluation found that HILIC conditions can lead to a substantial improvement in sensitivity for a large variety of compounds [39].
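The link between peak width and peak capacity can be made concrete with the common gradient approximation n_c ≈ 1 + t_G / w, where t_G is the gradient time and w the average peak width at base. The sketch below uses hypothetical widths purely to show how broader peaks reduce peak capacity; they are not measured values for any particular column.

```python
def gradient_peak_capacity(gradient_time_s, mean_peak_width_s):
    """Approximate gradient peak capacity: n_c = 1 + t_G / w,
    with w the average peak width at base (about 4 sigma)."""
    return 1.0 + gradient_time_s / mean_peak_width_s


# Hypothetical 15 min (900 s) gradient with illustrative average peak widths
sharp_peaks = gradient_peak_capacity(900, 6)    # e.g. narrow peaks
broad_peaks = gradient_peak_capacity(900, 12)   # e.g. peaks twice as wide

print(f"Peak capacity with 6 s peaks:  {sharp_peaks:.0f}")
print(f"Peak capacity with 12 s peaks: {broad_peaks:.0f}")
```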

Matrix Effects and Practical Considerations

Matrix effects, the suppression or enhancement of ionization by co-eluting compounds, are a critical consideration in LC-MS. The extent of matrix effects is highly dependent on the sample matrix, sample clean-up, and chromatographic mode. One study systematically evaluated matrix effects in plasma and urine and found that the optimal combination of stationary phase and mobile phase pH differed between RPLC and HILIC [39]. HILIC can be particularly beneficial when coupled with simple protein precipitation, as the organic-rich supernatant is compatible with the high-organic starting mobile phase, eliminating the need for solvent evaporation and reconstitution [39].
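A widely used way to quantify matrix effects is the post-extraction spike comparison: the peak area of a standard spiked into blank matrix extract is expressed as a percentage of its peak area in neat solvent. Values below 100% indicate ion suppression and values above 100% indicate enhancement. The following sketch assumes that approach; the ±20% tolerance and the peak areas are illustrative assumptions, not values taken from the cited studies.

```python
def matrix_effect_percent(area_post_extraction_spike, area_neat_standard):
    """Matrix effect (%) = 100 * (area in spiked matrix extract) / (area in neat solvent)."""
    return 100.0 * area_post_extraction_spike / area_neat_standard


def classify_matrix_effect(me_percent, tolerance=20.0):
    """Label the result; the default tolerance is an assumed, adjustable threshold."""
    if me_percent < 100.0 - tolerance:
        return "suppression"
    if me_percent > 100.0 + tolerance:
        return "enhancement"
    return "within tolerance"


# Hypothetical peak areas (illustrative only)
me = matrix_effect_percent(area_post_extraction_spike=630_000, area_neat_standard=900_000)
print(f"Matrix effect: {me:.0f}% ({classify_matrix_effect(me)})")
```

Running the same calculation per analyte for each column and mobile phase combination gives a direct basis for comparing RP and HILIC conditions.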

Table 2: Performance Comparison for Metabolomics

| Performance Metric | Reversed-Phase (RP) | Hydrophilic Interaction (HILIC) |
|---|---|---|
| Peak Shape & Efficiency | Typically sharp peaks, high efficiency [41] | Can exhibit broader peaks; improved with additives like phosphate [37] |
| Sensitivity (ESI-MS) | Good for a wide range of compounds | Often enhanced for polar compounds due to high organic content [37] [39] |
| Equilibration Time | Relatively fast | Can be longer [41] |
| Tolerance to Sample Solvent | Critical (sample should be in a weak solvent, i.e., highly aqueous) | Critical (sample should be in a weak solvent, i.e., high in organic content such as acetonitrile) |
| Handling of Matrix Effects | Well-documented; depends on cleanup | Can be different from RP; requires evaluation [39] |

Experimental Protocols for LC-MS Metabolomics

Sample Preparation for Cultured Cells

The following protocol, adapted for targeted amino acid analysis, outlines a robust workflow for adherent mammalian cells [38].

Quenching and Metabolite Extraction:

- Aspirate the culture medium and quickly wash the cell layer with ice-cold saline solution.

- Immediately add ice-cold 100% methanol (e.g., 1 mL per 10 cm² culture dish) to quench cellular metabolism.

- While the dish is on a bed of ice, use a cell scraper to harvest the cells directly into the quenching solvent.

- Transfer the cell suspension to a pre-cooled microcentrifuge tube.

- Vortex thoroughly and incubate on dry ice or at -80°C for 10 minutes to complete protein precipitation.

- Centrifuge at 4°C (e.g., 13,000-16,000 × g for 10-15 minutes) to pellet insoluble material and proteins.

- Carefully collect the supernatant, which contains the extracted metabolites, into a new tube.

Sample Normalization and Analysis: